Global cooperation on regulation and research is at the centre of the UK government’s ambitions for the first AI Safety Summit in November. The government has set out five priorities for the event, focusing on the security and alignment of next-generation foundation AI models such as OpenAI’s GPT-5 or Google’s Gemini.

Prime Minister Rishi Sunak announced plans for a global summit on the risks posed by AI earlier this year. Officials have since been working to bring academics, AI labs, tech companies and other governments to the event in November. It will be held at Bletchley Park near Milton Keynes, the home of computer science.

Leading AI labs including OpenAI, Google DeepMind and Anthropic are expected to attend and have previously agreed to make future frontier AI models available for safety research. Frontier models are the next-generation large language, generative and foundation models expected to significantly outperform today’s best-in-class systems.

Ahead of the summit, which takes place on 1–2 November, Secretary of State Michelle Donelan has begun negotiations with companies and officials. Last week Donelan also held a roundtable with a cross-section of civil society groups to try to allay fears that the summit would focus on, and support, Big Tech at the expense of other groups.

The Department for Science, Innovation and Technology (DSIT) is organising the summit and says it will focus on the risks created or exacerbated by the most powerful AI systems, including those yet to be released. Delegates will also debate the implications of the most dangerous capabilities of these new models, such as giving access to information that could undermine security, and the methods available to mitigate them.

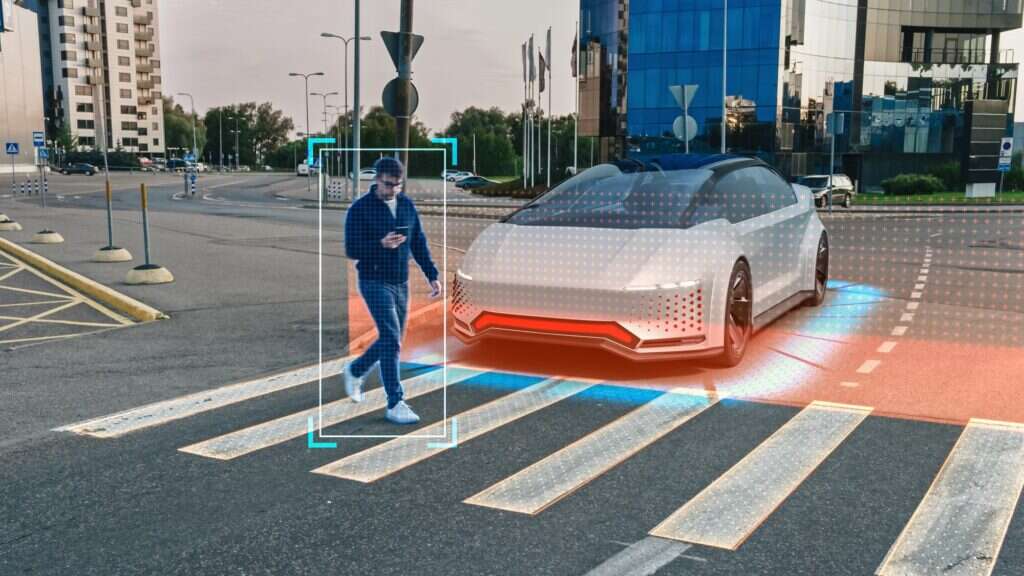

The summit will also examine how safe AI can be used for the public good and to improve people’s lives, for example in medical technology, transport safety and workplace efficiency. The government hopes the summit will address five objectives.

UK AI Safety Summit: the core objectives

The objectives include creating a shared understanding of the risks posed by frontier AI and of the need for action on those risks. Another is a forward process for international collaboration on frontier AI safety, including how best to support national and international frameworks to deliver that collaboration.

Beyond global cooperation, the objectives cover the measures individual organisations should take to increase frontier AI safety, and areas for collaboration on AI safety research, some of which is already under way. This will include ways to evaluate model capabilities and to develop global standards that support governance and regulation.

The Science, Innovation and Technology select committee recently published a report urging the government to speed up the adoption of AI regulation or risk being left behind. In the report, MPs reject calls for a pause on the development of next-generation foundation AI models but press the government to legislate faster. “Without a serious, rapid and effective effort to establish the right governance frameworks – and to ensure a leading role in international initiatives – other jurisdictions will steal a march and the frameworks that they lay down may become the default even if they are less effective than what the UK can offer.”

Many countries, international bodies such as the EU and UN, the leading AI labs and civil society groups are already working on AI safety research. The Trades Union Congress (TUC) recently formed its own task force to address workers’ rights in relation to AI. The OECD, the Global Partnership on AI (GPAI) and the G7 are developing standards, and the UK hopes to build on this work to create a global regulatory consensus through the summit.

Professor Gina Neff, executive director of the Minderoo Centre for Technology and Democracy at the University of Cambridge, and co-chair of the new TUC AI task force, said responsible and trustworthy AI can bring huge benefits, but laws have to ensure it works for all. “AI safety isn’t just a challenge for the future and it isn’t just a technical problem,” Neff said. “These are issues that both employers and workers are facing now, and they need the help from researchers, policymakers and civil society to build the capacity to get this right for society.”